MVCC stands for Multi-Version Concurrency Control. It is the basic transaction isolation idea that stands behind many transactional systems and allows different processes see different version of truth for the same data. Considering DBMS system, when you are running the query that performs “update” of a specific number of records in the table, you should guarantee specific transaction isolation: if you run “select” in parallel with this “update”, you most likely want this “select” to see the data that was in the table before the “update” has started and not the “dirty” data that was created by this “update” (that might be rollbacked as well as committed).

The solution to handle this particular problem is MVCC – you need to store a number of versions for each row of the table that got changed. This data should be stored somehow and somehow maintained. I will discuss a number of approaches to make it.

Postgres way

When you create the table, it is splitted in blocks called “pages”. Each block has the size from 8KB to 64KB. This value should be chosen at PostgreSQL compile time, by default it is equal to 8KB. PostgreSQL stores changes in the table inline. Each row has 4 metadata fields: xmin, xmax, cmin, cmax, where the “x” fields are related to transactions, and “c” to the commands inside a single transaction. First let’s focus on xmin and xmax. Xmin determines the transaction that created the row. Row created by the rollbacked transaction is not visible for any other transaction. Row created by transaction that is not yet committed is again invisible for concurrent transactions. This means that each transaction should be aware about the rollbacked transactions and running transactions. But how can the transaction be aware about the other transactions that are started after the current one? It is simple: transaction id is simply incremented and if transaction id of some concurrent transaction is greater than the id of the current one this transaction changes is invisible for current transaction. As for Xmax, it contains information about the row deletion. If it is not set – row is visible, if it is set to the ID of rollbacked transaction – row is visible, if it is set by some other running transaction – row is visible, but if it is set by some committed transaction that was committed before current transaction started – it is invisible. Cmin and Cmax are just the same for a single transaction: within a transaction you can insert and delete the same row many times and the transaction should be aware whether the row is visible or not for the current command.

So in general, it works really simple:

- When you insert data, you insert the row with xmin equal to current transaction id and xmax set to null;

- When you delete the data, you find visible row that should be deleted, and set its xmax to the current transaction id;

- When you update the data, for each updated row you first perform “delete” and then “insert”.

All of these changes are stored on the same pages of the table. To be able to track the pages with free space PostgreSQL maintains Free Space Map that has the information about the block and amount of space available in it. In this case if you have 1KB row and you want to update some integer column in it, you will create its copy that will occupy another 1KB, and this copy would be placed in the same file (if the process finds some space in FSM – it would be inserted there, otherwise it would be written to the end of the table).

In this case you need a specific process that would perform the cleanup of the table to remove dead rows, and this process in PostgreSQL is called “vacuum”. It goes through the table and for each page it removes dead tuples that are not visible by any transaction and puts the data to the end of the block. It does not move the tuples across the pages. Also this process updates FSM with the information about the blocks that have free space.

Oracle way

Oracle stores data in blocks, the default block size is 8KB. It can be specified for each tablespace separately. Oracle tends to store changes in the UNDO log. This way the table contains only the actual data, while previous state can be reconstructed by reading related row from the UNDO log. It is done in the following way:

- Data is inserted directly to the table. When the data in a block is processed, Oracle inserts transaction identifier to the block header, which allows you to find a related UNDO. Insert also adds some small record to UNDO log containing the information that this row is not yet visible;

- When the delete is performed, the whole row is put into the UNDO log;

- Updating transaction updates data in place and generates the UNDO vector, which contains source data for the changed columns only. For instance, if you have a hundred columns in a table and you’ve updated one of them, the update would be done in place (if possible) and the UNDO will contain the old value only for the updated column, not for all of them. Here’s an example of what happens within update:

This way when you read the data in Oracle you also need to read UNDO logs for the blocks that are locked by some other transactions to be able to reconstruct the data. UNDO log itself is the ring buffer, which means that the thing you can control is the size of this buffer, and it affects the time you can access “old” snapshot data. If you have a DWH running on Oracle you should have faced an error “snapshot too old” many times and it means that the data that your transaction needs to read from UNDO log has been already removed from there (overwritten) and you cannot reconstruct the snapshot of the table as it was when this particular statement or transaction has started.

Hive way

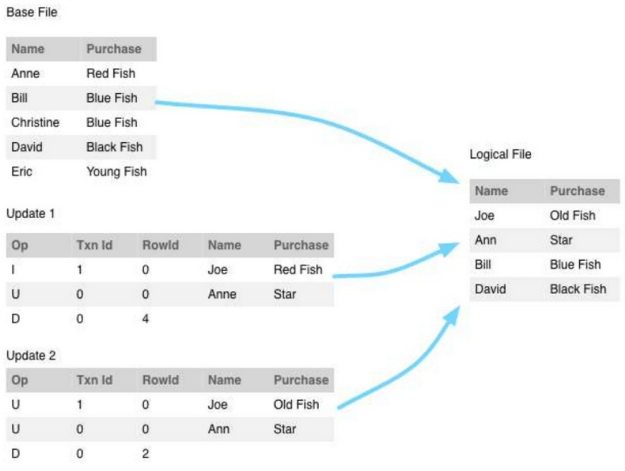

As you might know, HIVE-5317 adds the functionality to execute UPDATE and DELETE on top of the Hive table. HDFS filesystem is append-only and you have no option to update the data in place like you do it in Oracle or update row visibility transaction ids like you do it in PostgreSQL. So how is this implemented on top of append-only storage?

At the moment this functionality is supported only for ORCFiles and the key why it is implemented this way is the fact that in ORCFile each row has a row id, which uniquely identifies the row in the table. It works this way:

- Registers new delta file in metadata and writes the inserted rows there. Commit only changes the metadata in Hive Metastore;

- Registers new delta file in metadata and writes updated rows there together with their row ids. No other manipulation with old rows are performed;

- Registers new delta file in metadata and writes information about deleted row ids;

- It is the most interesting part. Selects check which delta files are committed and performs n-way join to read the data: joins the table itself with all the delta files and analyzing delta file it determines, whether the row is deleted or updated, and which the actual version of the row is.

As you should mention here, this approach generates many delta files that should be somehow maintained. This maintenance is straightforward and called “compaction”. There are two types of compactions:

- Minor compaction. Merges the delta files in a bigger delta files. It is usually triggered when you have more than 10 delta files for the table. This merge is straightforward: it performs n-way join of this files by row id and determines for each row its final state;

- Major compaction. Merges the table datafile with the deltas. It is usually performed when 10% of the table rows are updated.

This way you can easily have an update and delete support on top of append-only filesystem having the metadata stored in traditional DBMS.

Summary

MVCC is an essential part of any transactional system and if you are working with any of them it would be really useful for you to understand how it works and what can be adjusted in order to make it work better. For instance, wrong policy of vacuum in PostgreSQL might result in table bloat and even in data loss if the transaction id overlap will occur, for Oracle you might get many issues with the “snapshot too old” if you don’t manage your UNDO in the right way. With Hive you might have a huge performance issues if you would have a big amount of small updates causing continuous small compactions. Know your system.

I also wrote an article about how MVCC works in PostgreSQL with diagrams for INSERT, UPDATE, and DELETE statements:

https://vladmihalcea.com/2017/03/01/how-does-mvcc-multi-version-concurrency-control-work/

Let me know what you think.